Transparency, Source Code Quality, and Metrics

In "Hello World: Being Human in the Age of Algorithms," Hannah Fry relates this story:

In 2012, a number of disabled people in Idaho were informed that their Medicaid assistance was being cut. Although they all qualified for benefits, the state was slashing their financial support – without warning – by as much as 30 percent, leaving them struggling to pay for their care. This wasn't a political decision; it was the result of a new 'budget tool' that had been adopted by the Idaho Department of Health and Welfare – a piece of software that automatically calculated the level of support that each person should receive.

...

Unable to understand why their benefits had been reduced, or to effectively challenge the reduction, the residents turned to the American Civil Liberties Union (ACLU) for help.

...

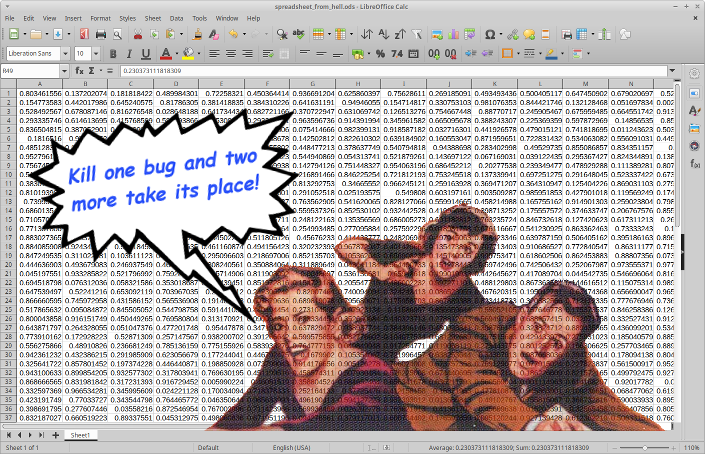

[The ACLU] began by asking for details on how the algorithm worked, but the Medicaid team refused to explain their calculations. They argued that the software that assessed the cases was a 'trade secret' and couldn't be shared. Fortunately, the judge presiding over the case disagreed. The budget tool that wielded so much power over the residents was then handed over, and revealed to be – not some sophisticated AI, not some beautifully crafted mathematical model, but an Excel spreadsheet.

Within the spreadsheet, the calculations were supposedly based on historical cases, but the data was so badly riddled with bugs and errors that it was, for the most part, entirely useless. Worse, once the ACLU team managed to unpick the equations, they discovered 'fundamental statistical flaws in the way that the formula itself was structured'. The budget tool had effectively been producing random results for a huge number of people. The algorithm – if you can call it that – was of such poor quality that the court would eventually rule it unconstitutional.

My first thoughts were, "How bad a spreadsheet hack do you gotta be to have your work declared unconstitutional? And just how many hacks does it take to build an unconstitutional spreadsheet?"

To be fair, math is hard. Government is complex. And I'm comfortable with the assumption that everyone who had a hand in building this spreadsheet had good intentions. If I had to venture a guess, the breakdown happened at the manager/politician/lawyer level.

It is probable that the complexity of the task quickly overtook the abilities of the spreadsheet author(s) and the capabilities of the tool. Eventually, no single person understood how the whole thing worked. Consequently, making a change in one place affected how the spreadsheet worked in n other places and no one was capable of regression testing the beast. But the manager/politician/lawyer types knew what to do: Hide behind the "trade secret" smoke.

There are many lessons from this story. Plenty of points of failure. What I'm interested in writing about is the importance of transparency and how a good set of performance metrics can help in maintaining transparency.

The externally facing opacity in this story is readily apparent. What we don't (and probably never will) see is the lack of transparency that prevailed inside the Idaho Department of Health and Welfare and among whoever designed and built the spreadsheet tool. I'd bet a round of drinks that neither has heard of Agile, much less employed its principles and practices. These by themselves, when actually practiced long term, go a long way toward establishing a culture of transparency. This is the key: long-term practice. A period of time is needed to change behaviors, mindsets, attitudes, beliefs, and, when necessary, personnel. Even over the long term, implementing an Agile methodology isn't improvisational theater. A strategy and a way to measure progress are needed.

Which gets me to metrics.

Selecting metrics and tuning them over time is critical to measuring team performance and developing improvement plans. Metrics that inform meaningful actions are the goal. Leave the vanity metrics that verify what managers want to hear or already "know" to the competition.

I've encountered my share of overly complex ways to measure the performance of individuals and teams. Often, metrics taken from machine-like task work (for example, assembly line work) are applied to creative or intellectual/knowledge tasks. This type of repurposing results in, for example, counting lines of code or the number of source code check-ins as an indicator of software developer productivity. It never ends well.

When working to define a set of metrics to track an individual's or a team's performance, it is more effective to begin by asking several questions.

What problems are you trying to solve?

What questions will your chosen metrics answer?

What questions will your chosen metrics not answer?

How, specifically, will you know you can trust your metrics? How will you know when they are right and how will you know when they are wrong?

How well do your metrics complement each other? That is, by combining them do you end up with a much better picture of individual or team performance than you do by considering individual metrics?

Do your metrics support any planned actions for improvement? Are you collecting actionable metrics or vanity metrics?

Finally, it is important to understand the limits of performance metrics. Displaying velocity charts that have fractions of story points implies an accuracy that simply isn't there. Significantly adjusting project timelines based on the first three sprints' worth of velocity data can have adverse secondary effects on the project.
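To make the point about false precision concrete, here is a minimal sketch in Python. The function name `velocity_summary` and the sprint numbers are made up for illustration; they don't come from any real project or tool. It reports velocity in whole story points and puts a rough range around the average, using one sample standard deviation either side as a crude stand-in for uncertainty.

```python
from statistics import mean, stdev


def velocity_summary(points_per_sprint):
    """Return (average, low, high) velocity in whole story points.

    The range is the mean plus or minus one sample standard deviation.
    That is a rough, assumed way to show spread, not a forecast.
    """
    if len(points_per_sprint) < 2:
        raise ValueError("need at least two sprints to estimate a spread")
    avg = mean(points_per_sprint)
    spread = stdev(points_per_sprint)
    # Round everything: fractional story points imply precision the data lacks.
    return round(avg), round(avg - spread), round(avg + spread)


# Hypothetical completed story points for a team's first three sprints.
first_three_sprints = [18, 31, 24]
print(velocity_summary(first_three_sprints))  # (24, 18, 31)
```

With only three data points, the range runs from 18 to 31 around an average of 24. A chart showing "24.3" would hide the fact that the data can't yet tell those apart, which is a good reason to hold off on big timeline changes this early.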

There is no perfect set of metrics, no divine set of measures that match an impossible standard of perfect objectivity and fairness. The best possible set of metrics is one that supports useful decisions rather than one that simply instructs managers where to apply the stick. Good metrics should help show the way to performance improvement rather than simply report results.

I work to have 3-5 metrics, depending on the individual, the team, and the project. Fewer than 3 and the picture starts to look rather flat. More than 5 and the task of performance monitoring can become overly complicated and cumbersome. Keep it lean and manageable. That way, it's easier to tell when things aren't working and your metrics are much less likely to violate your team's constitutional rights.